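In image processing, an image acquired by a single sensor usually captures only one aspect of a scene and can no longer meet application demands, so image fusion technology has emerged. Infrared images are formed mainly from the thermal radiation emitted by objects themselves. They therefore highlight thermal targets hidden in the background and are unaffected by lighting and weather, but they have low contrast and little texture detail [1]. Visible images are formed from reflected visible light, and their texture detail and contrast suit human visual perception better. However, their quality degrades badly in smoke, at night, and under similar conditions [2]. Fusing the two yields a complementary image that contains both the edges and details of the visible image and the thermal target information of the infrared image. As requirements in applications such as target detection and recognition and military surveillance keep rising, infrared and visible image fusion has become a research hotspot.

Fused images serve many fields:
- Security: faces can be recognized accurately in dark environments, under makeup [3], or when glasses are worn [4], which benefits commercial applications and law enforcement.
- Military: hidden targets can be recognized and tracked in harsh environments [5].
- Intelligent transportation: applications include pedestrian detection [6], vehicle recognition and inter-vehicle distance detection [7], and road obstacle classification [8].
- Agriculture: applications include fruit ripeness detection [9] and plant disease detection [10].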

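Over the past few decades, many infrared and visible image fusion methods have been proposed and adopted in practice. Most existing surveys cover the image fusion field as a whole, and few describe infrared and visible image fusion methods in detail. Those that do focus on infrared and visible fusion only briefly analyze current methods, without relating them to practical applications or giving application examples.

This paper is organized as follows. It first reviews the state of research on commonly used infrared and visible image fusion methods. It then outlines the main applications of infrared and visible image fusion and the metrics used to evaluate fusion quality. For six selected application scenes, nine typical fusion methods and six image quality metrics are chosen for experimental analysis. Finally, the development and applications of infrared and visible image fusion technology are summarized and future prospects are discussed.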

-

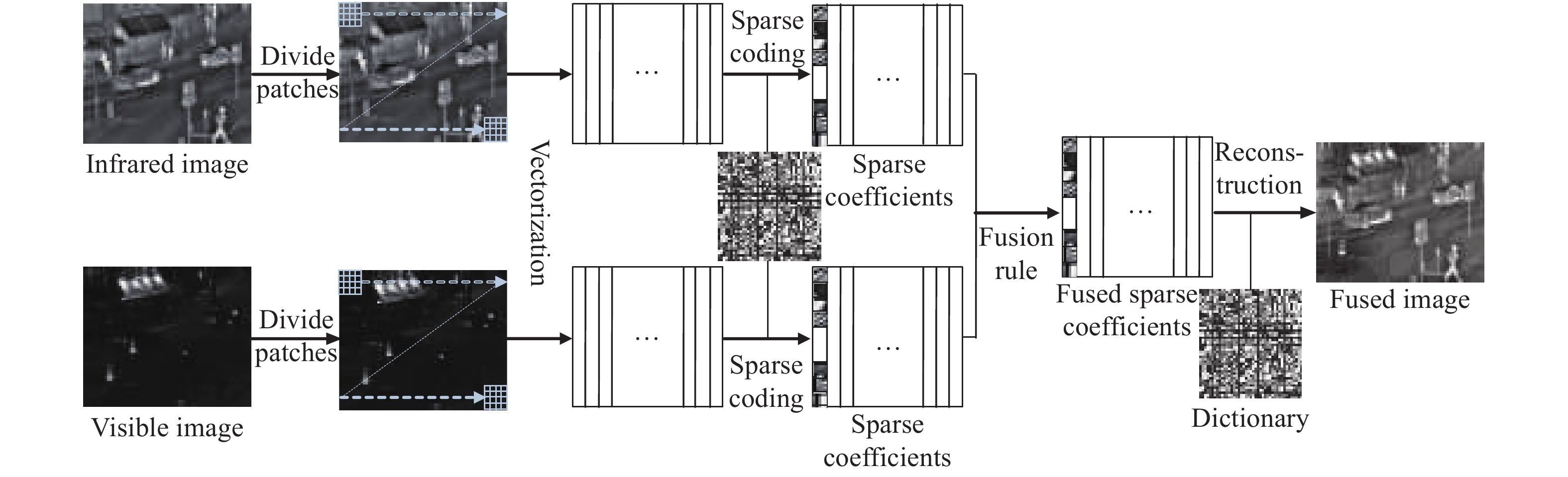

通过分辨率分解方法,基于变换域的图像融合方法可获取一系列包含不同层次的子图像,以保留更多的图像细节信息。多尺度变换是应用最为广泛的基于变换域的融合方法,其融合方法主要分为三个步骤:多尺度的正逆变换、融合规则的设计,具体融合框架流程如图1所示。

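A pyramid transform decomposes an image into sub-band images of different scales arranged in a pyramid, and fusion is performed on these sub-bands.

The Laplacian pyramid (LP) transform [11] was the first pyramid-based fusion method. Following its success, methods based on the ratio-of-low-pass pyramid [12], the contrast pyramid (CP) [13], the morphological pyramid [14], and the steerable pyramid [15] were proposed. The difference images that LP derives from a Gaussian pyramid emphasize detail features in the high-frequency sub-bands. However, they suffer from low image contrast and information redundancy. False-color fusion based on a color reference image [16], and fuzzy logic [9] with its good edge representation, can compensate for these shortcomings and improve fusion results.

The CP transform models the perceptual characteristics of the human visual system and makes up for the low contrast of LP. However, CP is not direction-invariant, a limitation that can be addressed by combining it with directional filters [17]. CP can also be improved through its fusion rules, for example with a contrast pyramid algorithm based on improved regional energy [18].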
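The three-step multi-scale framework (forward transform, fusion rule, inverse transform) can be illustrated with a Laplacian pyramid. The following is a minimal NumPy sketch, not the implementation of [11]. It rests on several assumptions:
- The inputs are two registered grayscale images stored as float arrays of equal size.
- The Gaussian kernel is approximated by the 5-tap binomial filter.
- The traditional rules apply: absolute-maximum selection for the detail levels and averaging for the top level.

All function names are hypothetical.

```python
import numpy as np

# 5-tap binomial kernel: a common approximation of the Gaussian used to build an LP
KERNEL = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0


def blur(img):
    """Separable 5x5 binomial smoothing with edge-replicated borders."""
    h, w = img.shape
    padded = np.pad(img, 2, mode="edge")
    rows = sum(KERNEL[k] * padded[:, k:k + w] for k in range(5))
    return sum(KERNEL[k] * rows[k:k + h, :] for k in range(5))


def expand(img, shape):
    """Upsample by zero insertion, then smooth (x4 restores the mean intensity)."""
    up = np.zeros(shape)
    up[::2, ::2] = img
    return 4.0 * blur(up)


def laplacian_pyramid(img, levels):
    """Return [L_0, ..., L_{levels-1}, G_levels]: detail levels plus the coarsest Gaussian level."""
    gauss = [img.astype(float)]
    for _ in range(levels):
        gauss.append(blur(gauss[-1])[::2, ::2])
    details = [g - expand(g_next, g.shape) for g, g_next in zip(gauss[:-1], gauss[1:])]
    return details + [gauss[-1]]


def reconstruct(pyr):
    """Inverse transform: expand from the top level and add the detail levels back."""
    img = pyr[-1]
    for detail in reversed(pyr[:-1]):
        img = expand(img, detail.shape) + detail
    return img


def lp_fuse(ir, vis, levels=4):
    pa, pb = laplacian_pyramid(ir, levels), laplacian_pyramid(vis, levels)
    # high-frequency (detail) levels: absolute-maximum selection
    fused = [np.where(np.abs(a) >= np.abs(b), a, b) for a, b in zip(pa[:-1], pb[:-1])]
    # low-frequency (top) level: plain average
    fused.append(0.5 * (pa[-1] + pb[-1]))
    return reconstruct(fused)
```

Each detail level stores exactly what `expand` loses, so `reconstruct` inverts `laplacian_pyramid` exactly. Any information lost in a fused result therefore comes from the fusion rule, not from the transform.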

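The pyramid transform was the earliest multi-scale transform to be developed. Compared with spatial-domain fusion methods, it substantially improves the preservation of image detail. However, it is a redundant transform with strong correlation between levels. Regions where the source images differ greatly therefore tend to show blocking artifacts after fusion, which reduces the robustness of the algorithm. Pyramid transforms also suffer from severe loss of source-image structural information and a low signal-to-noise ratio.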

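The concept of the wavelet transform was first proposed by Grossmann and Morlet [19] in 1984. Mallat [20] later established the multi-resolution decomposition theory of the wavelet transform, based on a pyramid algorithm for signal decomposition and reconstruction. Wavelet transforms include the following:
- the discrete wavelet transform (DWT) [21];
- the dual-tree discrete wavelet transform (DT-DWT) [22];
- the lifting wavelet transform [23];
- the quaternion wavelet transform [24];
- the spectral graph wavelet transform [25].

DWT realizes multi-scale decomposition of the source image through filter banks. Its scales are highly independent, and texture and edge information are well preserved [26]. However, DWT suffers from oscillation, shift variance, aliasing, and a lack of directional selectivity [27].

DT-DWT decomposes images with separable filter banks, which resolves the lack of directionality of DWT, and it has little redundancy and high computational efficiency. As a first-generation wavelet, however, DT-DWT does not apply to non-Euclidean spaces.

The lifting scheme is an ideal way to construct second-generation wavelets. It can be viewed entirely as a spatial-domain transform, supports adaptive design and irregular sampling, and yields good visual fusion results [23].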

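Compared with pyramid transforms, wavelet transforms do not produce blocking artifacts and have a high signal-to-noise ratio. They also offer perfect reconstruction, which reduces information redundancy during decomposition. However, they represent only part of the directional information in the source image, so some image detail is still lost.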
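As a concrete instance of DWT-based fusion, the sketch below uses the orthonormal Haar wavelet, the simplest choice. This choice and the function names are assumptions for illustration, not the filters used in [21] or [26]. Image sides must be divisible by `2**levels`, and the same absolute-maximum/average rules are applied.

```python
import numpy as np


def haar_dwt2(x):
    """One level of the orthonormal 2-D Haar DWT (image sides must be even)."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    ll = (a + b + c + d) / 2.0   # approximation
    lh = (a - b + c - d) / 2.0   # differences between neighbouring columns
    hl = (a + b - c - d) / 2.0   # differences between neighbouring rows
    hh = (a - b - c + d) / 2.0   # diagonal differences
    return ll, (lh, hl, hh)


def haar_idwt2(ll, details):
    """Exact inverse of haar_dwt2."""
    lh, hl, hh = details
    x = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2.0
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2.0
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x


def dwt_fuse(ir, vis, levels=2):
    if levels == 0:
        return 0.5 * (ir + vis)  # low-frequency band: average
    ll_a, det_a = haar_dwt2(ir)
    ll_b, det_b = haar_dwt2(vis)
    # detail bands: absolute-maximum selection
    fused_det = tuple(np.where(np.abs(p) >= np.abs(q), p, q) for p, q in zip(det_a, det_b))
    return haar_idwt2(dwt_fuse(ll_a, ll_b, levels - 1), fused_det)
```

Because the transform is orthonormal, it preserves energy and is inverted exactly, which is the perfect-reconstruction property mentioned above. The three detail bands carry only column, row, and diagonal differences, which illustrates the limited directional information of separable wavelets.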

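To capture directional information, suppress the Gibbs phenomenon, and achieve shift invariance, da Cunha et al. [28] proposed the non-subsampled contourlet transform (NSCT). Because it involves no down-sampling, NSCT eliminates the spectral aliasing of the contourlet transform. Combined with fuzzy logic, NSCT effectively enhances infrared targets while preserving visible-image details [29]. Combined with region-of-interest extraction, it successfully highlights infrared targets [30]. However, NSCT is computationally inefficient and cannot meet the needs of applications with strict real-time requirements.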

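To meet real-time requirements, Guo et al. [31] proposed the shearlet transform, which is multi-scale and multi-directional but still not shift-invariant. The non-subsampled shearlet transform (NSST) [32] satisfies these requirements and is more computationally efficient than NSCT. Its strong ability to capture and represent information has made NSST a popular method for infrared and visible image fusion.

Building on NSST fusion, Kong et al. [33] introduced fusion rules based on regional average energy and local contrast. These rules combine the advantages of spatial-domain analysis and multi-scale geometric analysis as fully as possible. NSST is, however, essentially a redundant transform. To overcome this drawback, Kong et al. [34] further introduced fast non-negative matrix factorization into NSST-based fusion. The resulting fused image retains the features of the source images while containing less redundant information.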

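The sub-band images produced by non-subsampled multi-scale multi-directional geometric transforms have the same size as the source image. This facilitates the extraction of detail and texture features and simplifies the design of subsequent fusion rules. However, NSCT decomposition is complex and hard to apply in scenes with high real-time requirements. NSST decomposition uses a non-subsampled pyramid, which easily loses detail in the high-frequency sub-bands and also reduces image brightness.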
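Same-size sub-bands simplify fusion rules, as the undecimated (à trous) wavelet shows. Here it serves as a simplified stand-in for the non-subsampled pyramid stage of NSCT and NSST. The directional filter banks of NSCT and the shearing filters of NSST are omitted, so this is neither transform. The sketch assumes registered grayscale float images, and the function names are hypothetical.

```python
import numpy as np

B3 = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0  # B3-spline smoothing taps


def atrous_smooth(img, level):
    """Separable B3-spline smoothing 'with holes': taps are 2**level pixels apart."""
    step = 2 ** level
    h, w = img.shape
    padded = np.pad(img, 2 * step, mode="edge")
    rows = sum(B3[k] * padded[:, k * step:k * step + w] for k in range(5))
    return sum(B3[k] * rows[k * step:k * step + h, :] for k in range(5))


def nonsubsampled_decompose(img, levels):
    """Return [detail_1, ..., detail_L, approximation]; every band keeps img.shape."""
    bands, current = [], img.astype(float)
    for j in range(levels):
        smoother = atrous_smooth(current, j)
        bands.append(current - smoother)  # band-pass detail at scale j, no down-sampling
        current = smoother
    return bands + [current]


def nonsubsampled_fuse(ir, vis, levels=3):
    ba, bb = nonsubsampled_decompose(ir, levels), nonsubsampled_decompose(vis, levels)
    # pixel-wise rules work directly because all bands are aligned with the image grid
    fused = [np.where(np.abs(p) >= np.abs(q), p, q) for p, q in zip(ba[:-1], bb[:-1])]
    fused.append(0.5 * (ba[-1] + bb[-1]))
    return sum(fused)  # reconstruction is simply the sum of all bands
```

No band is down-sampled, so a coefficient at (i, j) in every band refers to the same pixel. A pixel-wise rule such as `np.where` therefore applies directly, and reconstruction is just the sum of the bands. The price is that every level stores a full-size array, which is the redundancy noted above.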

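Other common multi-scale fusion methods include edge-preserving filters, the Tetrolet transform, the top-hat transform, and the Haar wavelet transform. Of these, edge-preserving filters are the most widely used. They decompose the source image into a base layer and one or more detail layers, which preserves spatial structure and reduces artifacts in the fused image. For example, the guided filter preserves the edge details of the source images while eliminating blocking artifacts [35]. The local edge-preserving filter effectively highlights image details while maintaining the global features of the image [36].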
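The base/detail decomposition with edge-preserving filtering can be sketched as follows, loosely following the two-scale guided-filtering idea. This is a simplified sketch, not the exact method of [35]. The following choices are assumptions made for illustration:
- intensities lie in [0, 1];
- the base layer comes from a mean filter of radius 15;
- saliency is a smoothed absolute Laplacian;
- the filter radii and `eps` values are those shown in the code.

Function names are hypothetical.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1)x(2r+1) window via an integral image (edge-replicated borders)."""
    h, w = img.shape
    k = 2 * r + 1
    padded = np.pad(img, r, mode="edge")
    s = np.pad(padded.cumsum(0).cumsum(1), ((1, 0), (1, 0)))
    return (s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]) / (k * k)


def guided_filter(guide, src, r=4, eps=1e-2):
    """Guided filter: locally linear model src ~ a * guide + b."""
    mean_i, mean_p = box_mean(guide, r), box_mean(src, r)
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    var_i = box_mean(guide * guide, r) - mean_i ** 2
    a = cov_ip / (var_i + eps)
    b = mean_p - a * mean_i
    return box_mean(a, r) * guide + box_mean(b, r)


def laplacian_abs(img):
    """Absolute 4-neighbour Laplacian, used here as a crude saliency measure."""
    p = np.pad(img, 1, mode="edge")
    return np.abs(p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4 * img)


def two_scale_fuse(ir, vis):
    imgs = [ir.astype(float), vis.astype(float)]
    bases = [box_mean(x, 15) for x in imgs]            # base layers
    details = [x - b for x, b in zip(imgs, bases)]     # detail layers
    sal = [box_mean(laplacian_abs(x), 5) for x in imgs]
    masks = [(sal[0] >= sal[1]).astype(float)]
    masks.append(1.0 - masks[0])
    # refine the binary masks with each source as guide so the weights follow its edges
    w_base = [np.clip(guided_filter(x, m, r=8, eps=0.3), 0, 1) for x, m in zip(imgs, masks)]
    w_det = [np.clip(guided_filter(x, m, r=2, eps=1e-3), 0, 1) for x, m in zip(imgs, masks)]
    base = sum(w * b for w, b in zip(w_base, bases)) / (sum(w_base) + 1e-12)
    detail = sum(w * d for w, d in zip(w_det, details)) / (sum(w_det) + 1e-12)
    return base + detail
```

The binary weight maps are filtered with the source image as guide, so the weights follow source edges. This is what suppresses blocking at region boundaries: a large radius yields smooth weights for the base layer, and a small radius yields sharp weights for the detail layer.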

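In 2010, Yang and Li [37] proposed an image fusion method based on sparse representation (SR), whose framework is shown in Fig. 2. Its key components are the construction of the over-complete dictionary and the sparse coefficient decomposition algorithm [38].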

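There are two main ways to construct an over-complete dictionary: from a data model or with a learning algorithm.

A data-model dictionary is built from a specific mathematical model. This is efficient but struggles with complex data. The problem can be addressed with a stationary wavelet multi-scale dictionary based on a joint learning strategy [39], or with a hybrid dictionary that combines an over-complete discrete cosine dictionary with basis functions [40].

A learned dictionary [38, 40-47] is constructed from a set of training samples. Common examples are the method of optimal directions (MOD) dictionary [48] and the K-SVD dictionary [49]. K-SVD alternates sparse coding with atom-by-atom updates computed by singular value decomposition. Learned dictionaries adapt well to the data but are computationally expensive.

Research on over-complete dictionaries therefore tends toward algorithms that combine the advantages of both approaches. Rubinstein et al. [50] first designed the sparse K-SVD method, which learns a sparse dictionary by combining a fixed dictionary with a learned one. Later, the following methods were also successfully applied to image fusion:
- clustered patches with PCA [51-53];
- optimal directions [46];
- adaptive sparse representation [41];
- online learning methods [44, 54];
- K-SVD dictionaries in the multi-scale geometric analysis domain [38, 55].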

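Among sparse coefficient decomposition algorithms, the matching pursuit (MP) algorithm greedily selects atoms from the trained over-complete dictionary. Their linear combination represents the image signal, but the iterations reach only a suboptimal result.

The orthogonal matching pursuit (OMP) algorithm [39, 56-57] improves on MP: after each selection, the residual is made orthogonal to all atoms selected so far. For the same accuracy, OMP is more computationally efficient than MP.

With OMP and MP, fusion rules are hard to design. To address this, the simultaneous orthogonal matching pursuit (SOMP) algorithm [39, 51, 58] modifies the atom selection of OMP so that the different source images are decomposed over the same subset of dictionary atoms. This simplifies the design of fusion rules, and SOMP is widely used in image fusion.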
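The following sketch illustrates both ingredients of SR fusion. The first is a fixed over-complete DCT dictionary, a data-model dictionary of size 64 × 256 for 8 × 8 patches. The second is OMP sparse coding. It is a minimal illustration under these assumptions, not the dictionary or solver of any cited work, and the function names are hypothetical.

```python
import numpy as np


def overcomplete_dct(patch=8, atoms_1d=16):
    """Fixed (data-model) dictionary: separable over-complete 2-D DCT with unit-norm atoms."""
    d = np.zeros((patch, atoms_1d))
    for k in range(atoms_1d):
        col = np.cos(np.arange(patch) * k * np.pi / atoms_1d)
        if k > 0:
            col -= col.mean()  # all atoms except the DC atom are zero-mean
        d[:, k] = col / np.linalg.norm(col)
    return np.kron(d, d)  # (patch**2) x (atoms_1d**2)


def omp(D, y, n_nonzero, tol=1e-6):
    """Orthogonal matching pursuit: greedy atom selection + least-squares refit on the support."""
    residual, support = y.astype(float).copy(), []
    sol = np.zeros(0)
    while len(support) < n_nonzero and np.linalg.norm(residual) > tol:
        k = int(np.argmax(np.abs(D.T @ residual)))  # atom most correlated with the residual
        if k in support:
            break
        support.append(k)
        # refit ALL selected atoms; the new residual is orthogonal to each of them
        sol, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ sol
    coef = np.zeros(D.shape[1])
    coef[support] = sol
    return coef
```

The least-squares refit over all selected atoms is what distinguishes OMP from MP, which only adjusts the coefficient of the newest atom. After the refit, the residual is orthogonal to every selected atom, so no atom is chosen twice.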

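Sparse representation differs from traditional multi-scale transform fusion in two main respects [59]:

1. Multi-scale methods generally fuse images with predefined basis functions, which can easily miss important features of the source images. SR-based methods instead use a learned over-complete dictionary whose rich set of atoms better represents and extracts image content.
2. Multi-scale methods decompose the images into multiple layers before fusing them, so the choice of decomposition level is critical. Designers usually set a relatively large number of levels to obtain rich spatial information, but as the number of levels grows, fusion becomes increasingly sensitive to noise and registration errors. SR instead uses a sliding window to split the image into overlapping patches and vectorizes them, which reduces artifacts and improves robustness to misregistration.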

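SR-based fusion alleviates the insufficient feature representation and strict registration requirements of multi-scale transforms, but it has its own shortcomings:
1. The over-complete dictionary has limited representation ability, which easily causes loss of texture detail.
2. The "max-L1" fusion rule is sensitive to random noise, which lowers the signal-to-noise ratio of the fused image.
3. The overlapping patches produced by the sliding window reduce computational efficiency.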
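A sliding-window SR fusion with the max-L1 rule can be sketched as below. The sketch makes several assumptions:
- 4 × 4 patches with a step of 1;
- an over-complete DCT dictionary;
- the fused patch keeps the mean of the winning patch (other schemes average the two means).

A compact OMP is included so the block is self-contained. The function names are hypothetical, and the loop is deliberately naive.

```python
import numpy as np


def dct_dictionary(patch=4, atoms_1d=8):
    """Over-complete separable 2-D DCT dictionary (patch**2 x atoms_1d**2), unit-norm atoms."""
    d = np.zeros((patch, atoms_1d))
    for k in range(atoms_1d):
        col = np.cos(np.arange(patch) * k * np.pi / atoms_1d)
        if k > 0:
            col -= col.mean()
        d[:, k] = col / np.linalg.norm(col)
    return np.kron(d, d)


def omp(D, y, k, tol=1e-8):
    """Compact orthogonal matching pursuit with at most k atoms."""
    support, sol, r = [], np.zeros(0), y.copy()
    for _ in range(k):
        if np.linalg.norm(r) < tol:
            break
        idx = int(np.argmax(np.abs(D.T @ r)))
        if idx in support:
            break
        support.append(idx)
        sol, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        r = y - D[:, support] @ sol
    x = np.zeros(D.shape[1])
    x[support] = sol
    return x


def sr_fuse(ir, vis, D, patch=4, step=1, k=8):
    """Sliding-window SR fusion with the max-L1 rule; overlapping patches are averaged."""
    h, w = ir.shape
    acc, cnt = np.zeros((h, w)), np.zeros((h, w))
    for i in range(0, h - patch + 1, step):
        for j in range(0, w - patch + 1, step):
            pa = ir[i:i + patch, j:j + patch].astype(float).ravel()
            pb = vis[i:i + patch, j:j + patch].astype(float).ravel()
            ma, mb = pa.mean(), pb.mean()
            xa, xb = omp(D, pa - ma, k), omp(D, pb - mb, k)
            # max-L1 rule: the patch whose sparse vector has the larger L1 norm wins
            x, m = (xa, ma) if np.abs(xa).sum() >= np.abs(xb).sum() else (xb, mb)
            acc[i:i + patch, j:j + patch] += (D @ x + m).reshape(patch, patch)
            cnt[i:i + patch, j:j + patch] += 1
    return acc / np.maximum(cnt, 1)
```

The nested loop sparse-codes every overlapping patch of both images, which illustrates drawback (3). The rule compares only L1 norms, so a noisy patch with many small coefficients can win the selection, which illustrates drawback (2).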

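The application of neural networks to image fusion began with the pulse coupled neural network (PCNN) model. Compared with other neural network models, PCNN extracts image information effectively without any training or learning process [60]. PCNN-based infrared and visible image fusion is usually combined with a multi-scale transform such as NSCT [61-66], NSST [60], the curvelet transform [67], or the contourlet transform [68].

PCNN is used mainly in the fusion strategy, most commonly in one of two ways: on the high-frequency sub-bands only, or on both high- and low-frequency sub-bands. In an NSCT framework, for example, an adaptive dual-channel PCNN can fuse both high- and low-frequency sub-band coefficients. Alternatively, PCNN can serve as the fusion rule for the high-frequency sub-bands only. The low-frequency sub-band is then fused with weighted averaging [62], regional variance integration [63], or a similar scheme.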
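A simplified PCNN used as a high-frequency fusion rule can be sketched as follows. Many PCNN variants exist, and none of the choices below are taken from [60-68]. This one assumes:
- feeding equal to the normalized coefficient magnitude;
- a fixed 3 × 3 linking kernel;
- an exponentially decaying threshold;
- the parameter values shown in the code.

Coefficients are selected by firing count, and the function names are hypothetical.

```python
import numpy as np

# 3x3 linking weights (inverse-distance style, centre excluded): an assumed, commonly seen choice
LINK_W = np.array([[0.707, 1.0, 0.707],
                   [1.0, 0.0, 1.0],
                   [0.707, 1.0, 0.707]])


def neighbour_sum(y):
    """Weighted sum of the previous pulses of the 8 neighbours (zero padding)."""
    h, w = y.shape
    p = np.pad(y, 1)
    return sum(LINK_W[a, b] * p[a:a + h, b:b + w] for a in range(3) for b in range(3))


def pcnn_fire_counts(stim, iters=30, beta=0.2, alpha_e=0.2, v_e=20.0):
    """Simplified PCNN with one neuron per coefficient; returns how often each neuron fired."""
    y = np.zeros_like(stim)
    e = np.ones_like(stim)       # dynamic threshold
    counts = np.zeros_like(stim)
    for _ in range(iters):
        u = stim * (1.0 + beta * neighbour_sum(y))  # feeding modulated by linking input
        y = (u > e).astype(float)                   # pulse output
        e = np.exp(-alpha_e) * e + v_e * y          # threshold decays, jumps after a pulse
        counts += y
    return counts


def pcnn_fuse_high(ca, cb, **params):
    """High-frequency rule: keep the coefficient whose neuron fired more often."""
    scale = max(np.abs(ca).max(), np.abs(cb).max()) + 1e-12  # joint normalisation to [0, 1]
    ta = pcnn_fire_counts(np.abs(ca) / scale, **params)
    tb = pcnn_fire_counts(np.abs(cb) / scale, **params)
    return np.where(ta >= tb, ca, cb)
```

No training is involved. However, the result depends on `beta`, `alpha_e`, `v_e`, and `iters`, which illustrates why PCNN parameters are tedious to set.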

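Deep learning-based fusion has gradually become a major research direction in image fusion, but its use for fusing images from heterogeneous sources is still at an early stage. Deep learning methods use deep features of the source images as salient features for reconstructing the fused image. Convolutional neural networks (CNNs) are currently the deep learning method most commonly used for image fusion. Li [69] and Ren [70] each proposed methods that use a pre-trained VGG-19 (Visual Geometry Group-19) network to extract deep features of the source images, with good fusion results. In 2019, Ma et al. [71] were the first to apply an end-to-end generative adversarial network (GAN) to image fusion. This avoids the manual design of complex decomposition levels and fusion rules required by traditional algorithms, and it effectively preserves source-image information.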

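In the PCNN model, neurons correspond one-to-one with image pixels, which addresses the detail loss of traditional methods. However, the network structure of PCNN is complex and its parameters are tedious to set. Moreover, combined with multi-scale transforms, it achieves only local adaptivity, and its computing speed and generalization ability still need improvement.

Deep learning-based fusion extracts deep features from image data, achieves adaptive fusion, and has strong fault tolerance and robustness. It has not yet been widely adopted, mainly for two reasons:
1. Labeled datasets for CNNs are difficult to build. Infrared and visible image fusion generally has no standard reference image, so the ground truth of the model cannot be precisely defined.
2. The loss functions of end-to-end models are not sufficiently targeted. End-to-end models solve the low sharpness of CNN results and the dependence on standard reference images, but they still lack task-specific loss functions.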

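Infrared and visible images can also be fused with subspace-based methods, such as:
- principal component analysis (PCA) [72];
- robust principal component analysis [73];
- independent component analysis [74];
- non-negative matrix factorization (NMF) [75].

Most source images contain redundant information. Subspace-based methods therefore project the high-dimensional source data into a low-dimensional space or subspace, capturing the internal structure of the images at a lower computational cost.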
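PCA fusion in its simplest form projects the two source images onto their first principal component and uses its normalized loadings as global fusion weights. The sketch below assumes registered grayscale images. Taking absolute values of the eigenvector is a common practical safeguard, not necessarily the choice in [72], and the function name is hypothetical.

```python
import numpy as np


def pca_fuse(ir, vis):
    """Global PCA fusion: weights come from the first principal component of the 2 x 2 covariance."""
    data = np.stack([ir.ravel(), vis.ravel()]).astype(float)  # 2 x (M*N) observations
    cov = np.cov(data)
    _, eigvecs = np.linalg.eigh(cov)   # eigenvalues in ascending order
    v = np.abs(eigvecs[:, -1])         # loadings of the principal component
    w = v / v.sum()                    # normalised weights that sum to 1
    return w[0] * ir + w[1] * vis, w
```

The image with the larger variance, usually the one with more contrast, dominates the principal component and so receives the larger weight.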

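Hybrid models combine the strengths of several methods to improve fusion performance. Common hybrids pair a multi-scale transform with saliency detection, with SR, or with PCNN.

Methods that combine a multi-scale transform with saliency detection generally integrate saliency detection into the multi-scale framework to enhance image information in regions of interest. Saliency detection is applied in two main ways:
- Weight calculation [76-80]: saliency maps are obtained from the high- and low-frequency sub-band images, corresponding weight maps are computed, and these weights are used during image reconstruction.
- Salient object extraction [81-82]: this is often used in surveillance applications such as target detection and recognition. A representative example is Zhang et al. [81], who used saliency analysis within an NSST framework to extract target information from the infrared image.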
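Saliency-based weight calculation can be sketched as follows. The saliency measure is the distance of a slightly blurred image from its global mean, in the spirit of frequency-tuned saliency. It is an assumption for illustration, not the detector used in [76-80]. The weight map is applied to low-frequency (base) bands, and all names are hypothetical.

```python
import numpy as np


def box_blur3(img):
    """3x3 mean filter with edge-replicated borders."""
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0


def saliency_map(img):
    """Grey-level saliency: distance of the blurred image from its global mean."""
    img = img.astype(float)
    return np.abs(box_blur3(img) - img.mean())


def saliency_weighted_base(base_ir, base_vis, src_ir, src_vis, eps=1e-12):
    """Fuse two low-frequency bands with a per-pixel weight map derived from saliency."""
    sa, sb = saliency_map(src_ir), saliency_map(src_vis)
    w_ir = (sa + eps) / (sa + sb + 2 * eps)  # in [0, 1]; equals 0.5 where saliencies agree
    return w_ir * base_ir + (1.0 - w_ir) * base_vis, w_ir
```

For a hot target on a cold background, the infrared saliency is high on the target, so `w_ir` approaches 1 there and the fused base layer keeps the target's intensity instead of averaging it away.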

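Multi-scale transforms suffer from low image contrast and a decomposition level that is hard to determine. Sparse representation tends to smooth the texture and edges of the source images and is computationally inefficient. Hybrid models that combine the two can therefore usually strike a good balance between them. The SR model is typically applied to the low-frequency sub-band obtained after multi-scale decomposition [83].

PCNN extracts image detail thoroughly, so multi-scale transforms are also often combined with both SR and PCNN. An SR-based rule fuses the low-frequency sub-band, and a PCNN-based rule fuses the high-frequency sub-bands [84]. Such hybrids effectively improve the sharpness and texture of the fused image. Their design must, however, weigh the strengths and weaknesses of SR and PCNN to avoid an overly complex model and excessive computational cost.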

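The quality of the fusion rules directly affects the fusion result. Traditional methods use the rule "absolute-maximum for high frequencies, weighted average for low frequencies". Both parts are pixel-level rules:
- The absolute-maximum rule is computationally cheap but tends to lose image information and produce overly sharp edges.
- Weighted averaging can also lose important features. If the weights are set too simply, the gray levels of the fused image may differ markedly from those of the source images.

Many researchers have improved these pixel-level rules. Examples include an absolute-maximum rule based on contrast and energy [24], a weighted average whose weights are determined by spatial frequency [82], and a weighted average based on a bilateral filter [25].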
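The traditional rules and the spatial-frequency-weighted average can be written in a few lines. The sketch computes spatial frequency, $SF=\sqrt{RF^2+CF^2}$, globally over each low-frequency band. Whether [82] uses global or block-wise SF is not stated here, so the global version is an assumption, and the names are hypothetical.

```python
import numpy as np


def abs_max_rule(ha, hb):
    """High-frequency rule: keep the coefficient with the larger magnitude."""
    return np.where(np.abs(ha) >= np.abs(hb), ha, hb)


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2) from mean squared horizontal and vertical differences."""
    img = img.astype(float)
    rf = np.sqrt(np.mean(np.diff(img, axis=1) ** 2))  # row frequency
    cf = np.sqrt(np.mean(np.diff(img, axis=0) ** 2))  # column frequency
    return np.sqrt(rf ** 2 + cf ** 2)


def sf_weighted_average(la, lb):
    """Low-frequency rule: weights proportional to each band's spatial frequency."""
    sa, sb = spatial_frequency(la), spatial_frequency(lb)
    if sa + sb == 0:
        return 0.5 * (la + lb)  # both bands flat: fall back to a plain average
    return (sa * la + sb * lb) / (sa + sb)
```

For example, `abs_max_rule(np.array([1.0, -3.0]), np.array([-2.0, 2.0]))` returns `[-2.0, -3.0]`: the sign is kept and only the magnitude decides.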

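Region-based and matrix-analysis-based fusion rules are also common.

Human observers attend to images at the level of regions, so region-based rules match human visual perception better. They also account for the relationships among pixels, so local image features are better reflected. Common examples are regional average energy [85] and regional cross gradient [86].

Matrix-analysis rules mainly comprise fuzzy logic [56, 87] and non-negative matrix factorization [34]. Fuzzy logic is often applied within weighted-average rules to fuse the high-frequency sub-bands. NMF exploits the non-negativity of pixel values to decompose the image into non-negative components, which gives low computational complexity and high sharpness. It is usually used as the low-frequency fusion rule.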
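A regional-energy selection rule can be sketched as follows. The 3 × 3 window, the zero padding, and the choose-max form (rather than an energy-weighted average) are assumptions, not details of [85]. The names are hypothetical.

```python
import numpy as np


def local_energy(coef, r=1):
    """Sum of squared coefficients in a (2r+1)x(2r+1) window (zero padding)."""
    h, w = coef.shape
    k = 2 * r + 1
    sq = np.pad(coef.astype(float) ** 2, r)
    return sum(sq[i:i + h, j:j + w] for i in range(k) for j in range(k))


def region_energy_rule(ca, cb, r=1):
    """Keep, at each position, the coefficient whose neighbourhood carries more energy."""
    ea, eb = local_energy(ca, r), local_energy(cb, r)
    return np.where(ea >= eb, ca, cb)
```

Consider `ca` holding a single coefficient 3.0 at the centre of a 5 × 5 band, with `cb` filled with 0.5. The rule then takes all nine coefficients around the centre from `ca`, whereas a pixel-wise absolute-maximum rule would take the eight neighbours from `cb`. Keeping a neighbourhood from one source is what preserves local structure.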

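Table 1 lists commonly used infrared and visible image fusion methods with their fusion strategies, advantages, limitations, and applicable scenes. The six categories appear in the chronological order in which they were proposed:
1. pyramid transforms;
2. wavelet transforms;
3. non-subsampled multi-scale multi-directional geometric transforms;
4. sparse representation;
5. neural networks;
6. hybrid methods.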

Table 1. Comparison of infrared and visible image fusion methods

| Fusion methods | Specific methods | Fusion strategies | Advantages | Limitations | Applicable scenes |
|---|---|---|---|---|---|
| Pyramid transforms | Laplacian pyramid | Fuzzy logic [9] | Smooth image edges; less time consumption; fewer artifacts | Loss of image details; blocking artifacts; data redundancy | Short-distance scenes with sufficient light, such as equipment inspection |
| | Contrast pyramid | Clonal selection algorithm [17]; teaching-learning-based optimization [88]; multi-objective evolutionary algorithm [89] | High image contrast; abundant feature information | Low computing efficiency; loss of image details | (as above) |
| | Steerable pyramid | Absolute value maximum selection (AVMS) [90]; expectation maximization (EM) algorithm [91]; PCNN and weighting [92] | Abundant edge detail; effective suppression of the Gibbs effect; effective fusion of geometric and thematic features | Increased algorithm complexity; loss of image details | (as above) |
| Wavelet transform | Discrete wavelet transform | Regional energy [93]; target region segmentation [21] | Distinct texture information; highly independent scale information; fewer blocking artifacts; higher signal-to-noise ratio | Image aliasing; ringing artifacts; strict registration requirements | Short-distance scenes, such as face recognition |
| | Dual-tree discrete wavelet transform | Particle swarm optimization [22]; fuzzy logic and population-based optimization [94] | Less redundant information; less time consumption | Limited directional information | (as above) |
| | Lifting wavelet transform | Local regional energy [23]; PCNN [85] | High computing speed; low space complexity | Loss of image details; image distortion | (as above) |
| Non-subsampled multi-scale multi-direction geometric transform | NSCT | Fuzzy logic [29]; region of interest [30] | Distinct edge features; elimination of the Gibbs effect; better visual perception | Loss of image details; low computing efficiency; poor real-time performance | Scenes with complex backgrounds, such as rescue scenes |
| | NSST | Region average energy and local directional contrast [33]; FNMF [34] | Superior sparse representation ability; high real-time performance | Loss of luminance information; strict registration requirements; loss of high-frequency image details | Cases requiring real-time processing, such as intelligent traffic monitoring |
| Sparse representation | - | Saliency detection [44, 86-87]; PCNN [56, 95] | Better robustness; fewer artifacts; reduced misregistration effects; abundant brightness information | Smoothed edge and texture information; complex computation; loss of high-frequency edge features | Scenes with few feature points, such as the sea surface |
| Neural network | PCNN | Multi-scale transform and sparse representation; multi-scale transform | Superior adaptability; higher signal-to-noise ratio; high fault tolerance | Model parameters are hard to set; complex and time-consuming algorithms | Automatic target detection and localization |
| | Deep learning | VGG-19 and multi-layer fusion [69]; VGG-19 and saliency detection [70] | Less artificial noise; abundant feature information; fewer artifacts | Requires ground truth in advance | (as above) |
| | Deep learning | GAN [71] | Avoids manually designing complicated activity-level measurements and fusion rules | Visual information fidelity and correlation coefficient are not optimal | (as above) |
| Hybrid methods | Multi-scale transform and saliency | Weight calculation [76-80]; salient object extraction [81, 82] | Maintains the integrity of salient object regions; improves the visual quality of the fused image; reduces noise | Inconsistent highlighting of salient areas; loss of background information | Surveillance applications, such as object detection and tracking |
| | Multi-scale transform and SR | Absolute values of coefficients and SR [38]; fourth-order correlation coefficient matching and SR [83] | Retains luminance information; excellent stability and robustness | Poor real-time performance; loss of image details | - |

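Target recognition has long been an important and popular research area in computer vision. Image fusion processes image information at multiple levels and from multiple aspects. It yields more accurate information and better visual quality, providing strong support for subsequent image processing.

As security requirements in commercial applications and law enforcement keep increasing, face recognition has become an important research area. Materials applied to the face, such as cosmetics, alter facial features and can cause visible-light biometric face recognition systems to fail. Infrared thermal radiation, by contrast, is emitted naturally by skin tissue and is not affected by cosmetics [3, 96]. As shown in Fig. 3, cosmetics strongly affect visible imaging but have almost no effect on thermal and polarimetric thermal imaging, so image fusion achieves good face recognition results.

In practice, security applications also require recognizing faces at medium and long range. Omri et al. proposed a wavelet transform method based on singular value decomposition and principal component analysis, which has been successfully used in long-range automatic face recognition products. Similarly, genetic algorithms [97] have been applied successfully in this area. For people wearing glasses, infrared radiation cannot penetrate the glass, so face recognition is often combined with ellipse fitting [98].

Beyond the face itself, fused infrared and visible images can support recognition based on other features. Examples include a fully automatic ear-based biometric system for all-weather monitoring [99] and periocular recognition when the face is occluded [100].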

-

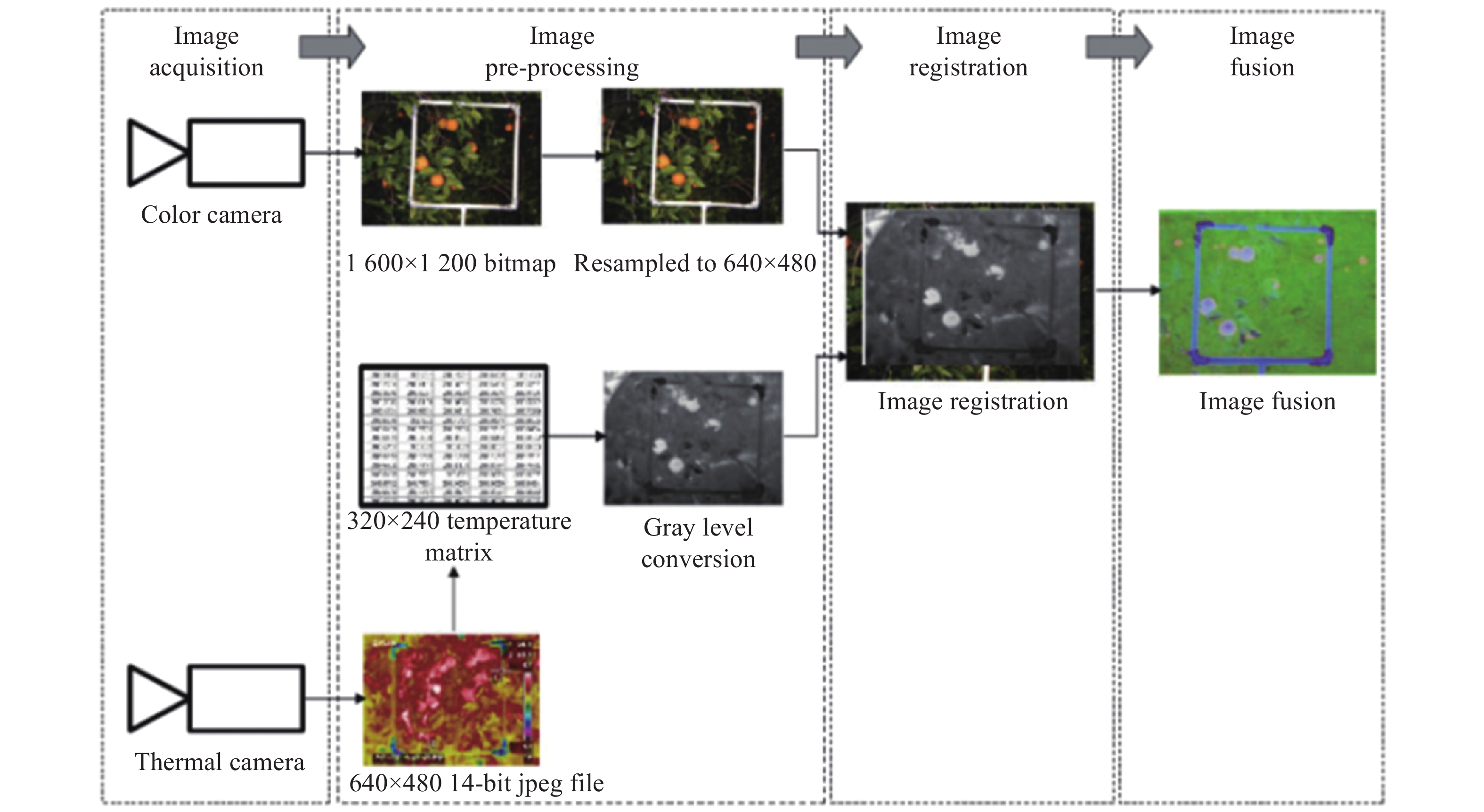

目标检测与目标识别的最大区别在于目标检测需要确定目标的准确位置。图像融合方法在目标检测的应用主要有两种:融合后检测、检测后融合。目前较为流行的方法为前者,可应用于提高农作物自动化采摘精度,克服强光下图像产生过曝问题,图4为该检测方法的图像处理框架[9]。此外,在智能交通领域中,先融合后检测方法可对行人或车辆进行有效避障[7];或是构建先获取源图像的检测结果后对图像进行分割融合的鲁棒性检测系统[6]。

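Target tracking is generally performed on videos or time-series images. Exploiting target motion, Han et al. [101] used the thermal radiation and motion information of targets as saliency features. This achieves fast detection and tracking and enhances the visualization needed in surveillance applications. Target tracking and reconnaissance usually require airborne equipment carrying imaging sensor platforms. Infrared and visible fusion systems built on airborne imagery can operate around the clock and highlight target information in real time [5].

Compared with other applications, target tracking places more emphasis on real-time performance. Complex algorithms with high fusion quality sometimes do not meet practical needs, so simpler fusion algorithms are often adopted in practice. Examples include the ratio-of-low-pass pyramid, used to reduce computational cost [102], and two-scale image decomposition with mean and median filters [77]. Nevertheless, infrared and visible image fusion for real-time target detection and tracking is still under development and has seen little deployment on hardware.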

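Fused images obtained in dark environments contain complete information, but the human visual system is more sensitive to color images [103]. Night-vision surveillance requires clear and colorful images or video, and color fused infrared and visible images are ideal sources for it. Transferring the color characteristics of a color image to the fused image therefore enhances contrast in night-vision surveillance, highlights target information, and provides good visual perception [104-105].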
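Color transfer by statistics matching can be sketched as follows. A false-color composite of the inputs has each channel's mean and standard deviation matched to a daytime color reference. Reinhard-style transfer normally works in the decorrelated lαβ space. Matching in RGB and the particular false-color mapping are simplifying assumptions, not the methods of [104-105], and the names are hypothetical.

```python
import numpy as np


def false_color(ir, vis):
    """Hypothetical false-colour composite: R = IR, G = visible, B = visible - IR."""
    return np.stack([ir, vis, vis - ir], axis=-1).astype(float)


def transfer_color_stats(src, ref, clip=True, eps=1e-12):
    """Match each channel's mean and standard deviation to those of a reference colour image."""
    out = np.empty(src.shape, dtype=float)
    for c in range(src.shape[-1]):
        s, r = src[..., c].astype(float), ref[..., c].astype(float)
        out[..., c] = (s - s.mean()) / (s.std() + eps) * r.std() + r.mean()
    return np.clip(out, 0.0, 1.0) if clip else out  # assumes intensities in [0, 1]
```

Only first- and second-order statistics are transferred, which is why the mapping is simple and fast, the property that makes color mapping attractive for real-time night-vision use.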

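For urban security surveillance, Davis et al. [106] introduced background subtraction into image fusion, building a fusion system that provides all-weather color surveillance. Other night-vision surveillance applications include:
- an airborne night-vision surveillance system built on DWT and target region segmentation [107];
- an NSCT-based night-vision navigation and surveillance method [108];
- a real-time night-vision surveillance system with enhanced edge information [109];
- a night-vision camera system based on NMF and color transfer [110].

For military targets, Hogervorst et al. [111] used color mapping to optimize color matching between images and produce more natural-looking fused images. With this method, even camouflaged targets can be identified at night. Because the color mapping is simple and fast, it has gradually been adopted in the military domain. As shown in Fig. 5, image (f) clearly reveals the camouflaged target, effectively highlighting potential objects of surveillance.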

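Infrared and visible image fusion has a wide range of applications, including color vision enhancement in the military, agriculture, medical imaging, and remote sensing.
- Agriculture: besides the fruit detection and harvesting described above, fusion can assess fruit firmness and soluble solids content [112] and improve the early detection of diseased plants [10].
- Medical imaging: fusing infrared and visible video in real time, using a fusion method with tunable mode selection, helps surgeons assess critical bile duct structures during operations [113].
- Remote sensing: fused images are applied to spatial information on urban targets [114], meteorological early warning [115], high-altitude UAV equipment [116], and other areas [117-118].
- Document restoration: fusion can visually enhance old documents and improve the readability of old texts [119-120].

Most current complex algorithms focus on improving fusion quality but are not very practical in real applications. As computational imaging and sensor technology continue to advance, low-cost fusion algorithms with high real-time performance are expected to spread to more fields [121-122].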

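Methods for evaluating image fusion quality are either subjective or objective.

Subjective evaluation grades images into five levels: "excellent", "good", "fair", "poor", and "very poor". It is a qualitative analysis with a strong personal element. It cannot objectively distinguish two fused images of similar quality, and adjacent grades have no clear boundaries.

Objective evaluation computes image metrics with specific formulas to analyze the fused image quantitatively. Its metrics divide into no-reference metrics and reference-based metrics [123].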

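The definitions and explanations of commonly used fusion metrics are given in Table 2 and Table 3. The notation is as follows:
- The source images are of size $M\times N$.
- $A$, $B$, and $S$ denote source images; $F$ and $R$ denote the fused image and the reference image, respectively.
- $\mu$ is the mean gray level of an image.
- $p_k$ is the probability of gray level $k$ ($k=0,1,2,\cdots,L-1$, with $L=256$ for 8-bit images).
- $Z\in\{A,B,S,F,R\}$, $i=1,2,\cdots,M$, and $j=1,2,\cdots,N$.
- $Z(i,j)$ is the gray level of image $Z$ at pixel $(i,j)$, and $\Delta Z$ is the gray-level difference of image $Z$.
- $Q^{ZF}(i,j)$ is the amount of edge information transferred from image $Z$ to $F$.
- $P_Z$ is the gray-level probability distribution of image $Z$, and $P_{FZ}$ is the joint distribution of $F$ and $Z$.
- $w^{Z}(i,j)$ is the edge-strength weighting function of image $Z$.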

Table 2. Evaluation index without reference image

| Evaluation indicators | Definition | Explanation |
|---|---|---|
| IE [124] | $IE=-\sum_{k=0}^{L-1}p_k\log_2 p_k$ | The amount of information contained in an image increases as IE increases |
| SD [125] | $SD=\sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(F(i,j)-\mu\right)^2}$ | SD measures the deviation of pixel values from the mean gray level; a larger SD indicates higher image contrast |
| AG [126] | $AG=\frac{1}{(M-1)(N-1)}\sum_{i=1}^{M-1}\sum_{j=1}^{N-1}\sqrt{\frac{\Delta Z_i^2+\Delta Z_j^2}{2}}$ | AG reflects the gray-level variation of the image ($\Delta Z_i$, $\Delta Z_j$: differences along the two image axes); a higher AG indicates richer detail |
| $Q^{AB/F}$ [127] | $Q^{AB/F}=\frac{\sum_{i=0}^{M-1}\sum_{j=0}^{N-1}\left(Q^{AF}(i,j)\,w^{A}(i,j)+Q^{BF}(i,j)\,w^{B}(i,j)\right)}{\sum_{i=0}^{M-1}\sum_{j=0}^{N-1}\left(w^{A}(i,j)+w^{B}(i,j)\right)}$ | $Q^{AB/F}$ evaluates the transfer of edge information; the closer it is to 1, the better the fusion |
| MI [2] | $I_{FA}=\sum_{i=0}^{L-1}\sum_{j=0}^{L-1}P_{FA}(i,j)\log_2\frac{P_{FA}(i,j)}{P_F(i)\,P_A(j)}$; $MI_{AB}^{F}=I_{FA}+I_{FB}$ | MI characterizes how much source-image information the fused image inherits; a larger MI means more information is preserved (here $i$ and $j$ index gray levels) |
| CC [128] | $CC=\frac{\sum_{i=1}^{M}\sum_{j=1}^{N}\left[\left(F(i,j)-\mu_F\right)\left(S(i,j)-\mu_S\right)\right]}{\sqrt{\sum_{i=1}^{M}\sum_{j=1}^{N}\left(F(i,j)-\mu_F\right)^2\sum_{i=1}^{M}\sum_{j=1}^{N}\left(S(i,j)-\mu_S\right)^2}}$ | Similarity between images increases with CC, meaning more image information is preserved |

Table 3. Evaluation index based on reference image

| Evaluation indicators | Definition | Explanation |
|---|---|---|
| SSIM [129] | $SSIM_{RF}=\frac{2\mu_R\mu_F+c_1}{\mu_R^2+\mu_F^2+c_1}\cdot\frac{2\sigma_R\sigma_F+c_2}{\sigma_R^2+\sigma_F^2+c_2}\cdot\frac{\sigma_{RF}+c_3}{\sigma_R\sigma_F+c_3}$ | SSIM measures luminance, contrast, and structural distortion; a larger SSIM means the fused image is more similar to the reference image ($\sigma_R$, $\sigma_F$: standard deviations; $\sigma_{RF}$: covariance; $c_1$, $c_2$, $c_3$: small stabilizing constants) |
| RMSE [2] | $RMSE=\sqrt{\frac{1}{M\times N}\sum_{i=1}^{M}\sum_{j=1}^{N}\left[R(i,j)-F(i,j)\right]^2}$ | Image quality improves as RMSE decreases |
| PSNR [2] | $PSNR=10\cdot\lg\frac{255^2\times M\times N}{\sum_{i=1}^{M}\sum_{j=1}^{N}\left[R(i,j)-F(i,j)\right]^2}$ | PSNR evaluates whether image noise is suppressed; distortion decreases as PSNR increases |

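No-reference metrics can be further divided into single-image metrics and source-image-based metrics.

Single-image metrics evaluate the final fused image on its own. They include information entropy (IE), standard deviation (SD), average gradient (AG), and spatial frequency, which measure in different ways the information content and gray-level distribution of the fused image. IE summarizes the gray-level histogram, and SD measures how far pixel gray levels deviate from the mean; both reflect the information content of the fused image. AG and spatial frequency reflect the rate of gray-level change and the sharpness of the image. Single-image metrics usually consider only one statistical property of the image, so they can disagree with subjective evaluation.

Source-image-based metrics take a mainly information-theoretic view: they measure how much information the fused image extracts from the source images. Common examples are mutual information (MI), the correlation coefficient (CC), and the edge-information transfer metric $Q^{AB/F}$. Cross entropy and joint entropy, derived from information entropy, also belong here. IE reflects only the information content of the fused image and cannot indicate the overall fusion effect; cross entropy and joint entropy compensate for this.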
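The no-reference metrics in Table 2 can be computed as below. The sketch assumes 8-bit grayscale images with integer values 0 to 255 and follows the formulas in Table 2. $Q^{AB/F}$ is omitted for brevity, and the function names are hypothetical.

```python
import numpy as np


def entropy(img, levels=256):
    """IE: Shannon entropy (bits) of the grey-level histogram."""
    p = np.bincount(img.ravel().astype(np.int64), minlength=levels) / img.size
    p = p[p > 0]
    return float(-(p * np.log2(p)).sum())


def std_dev(img):
    """SD: root-mean-square deviation from the mean grey level."""
    img = img.astype(float)
    return float(np.sqrt(np.mean((img - img.mean()) ** 2)))


def average_gradient(img):
    """AG over the (M-1) x (N-1) grid of forward differences."""
    img = img.astype(float)
    dx = img[:-1, 1:] - img[:-1, :-1]  # difference along a row
    dy = img[1:, :-1] - img[:-1, :-1]  # difference along a column
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def mutual_information(a, f, levels=256):
    """I_FA from the joint grey-level histogram of F and A."""
    joint = np.histogram2d(f.ravel(), a.ravel(), bins=levels,
                           range=[[0, levels], [0, levels]])[0]
    p_fa = joint / joint.sum()
    p_f, p_a = p_fa.sum(axis=1), p_fa.sum(axis=0)
    nz = p_fa > 0
    return float((p_fa[nz] * np.log2(p_fa[nz] / np.outer(p_f, p_a)[nz])).sum())


def fusion_mi(a, b, f):
    """MI_AB^F = I_FA + I_FB."""
    return mutual_information(a, f) + mutual_information(b, f)


def correlation_coefficient(f, s):
    """CC between the fused image F and a source image S."""
    f, s = f.astype(float) - f.mean(), s.astype(float) - s.mean()
    return float((f * s).sum() / np.sqrt((f ** 2).sum() * (s ** 2).sum()))
```

As a sanity check, the mutual information of an image with itself equals its entropy, and the CC of an image with any positive affine copy of itself is 1.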

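Reference-based metrics compare the fused image with a standard reference image, for example in gray level and noise. They mainly include structural similarity (SSIM), root-mean-square error (RMSE), and peak signal-to-noise ratio (PSNR).
- SSIM compares luminance, contrast, and structural distortion between images.
- RMSE, the deviation index, and the degree of distortion all compare pixel gray levels.
- PSNR assesses quality by measuring whether noise in the fused image is suppressed.

In practical image fusion, however, a reference image is usually unavailable, so these metrics are not yet widely used.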
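The reference-based metrics in Table 3 can be sketched similarly. The sketch assumes 8-bit images (peak value 255) and computes SSIM over the whole image as a single window. It uses the usual constants $c_1=(0.01\cdot 255)^2$, $c_2=(0.03\cdot 255)^2$, and $c_3=c_2/2$. The standard SSIM averages this quantity over local windows, so the global version is a simplification. Names are hypothetical.

```python
import numpy as np


def rmse(ref, fused):
    d = ref.astype(float) - fused.astype(float)
    return float(np.sqrt(np.mean(d ** 2)))


def psnr(ref, fused, peak=255.0):
    """10 * log10(peak^2 / MSE); infinite for identical images."""
    mse = np.mean((ref.astype(float) - fused.astype(float)) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))


def global_ssim(ref, fused, peak=255.0):
    """Luminance x contrast x structure terms, computed once over the whole image."""
    r, f = ref.astype(float), fused.astype(float)
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    c3 = c2 / 2.0
    mr, mf = r.mean(), f.mean()
    sr, sf = r.std(), f.std()
    srf = np.mean((r - mr) * (f - mf))  # covariance sigma_RF
    lum = (2 * mr * mf + c1) / (mr ** 2 + mf ** 2 + c1)
    con = (2 * sr * sf + c2) / (sr ** 2 + sf ** 2 + c2)
    struct = (srf + c3) / (sr * sf + c3)
    return float(lum * con * struct)
```

For example, a fused image that differs from the reference by exactly one gray level everywhere has RMSE 1 and PSNR $20\lg 255\approx 48.13$ dB.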

-

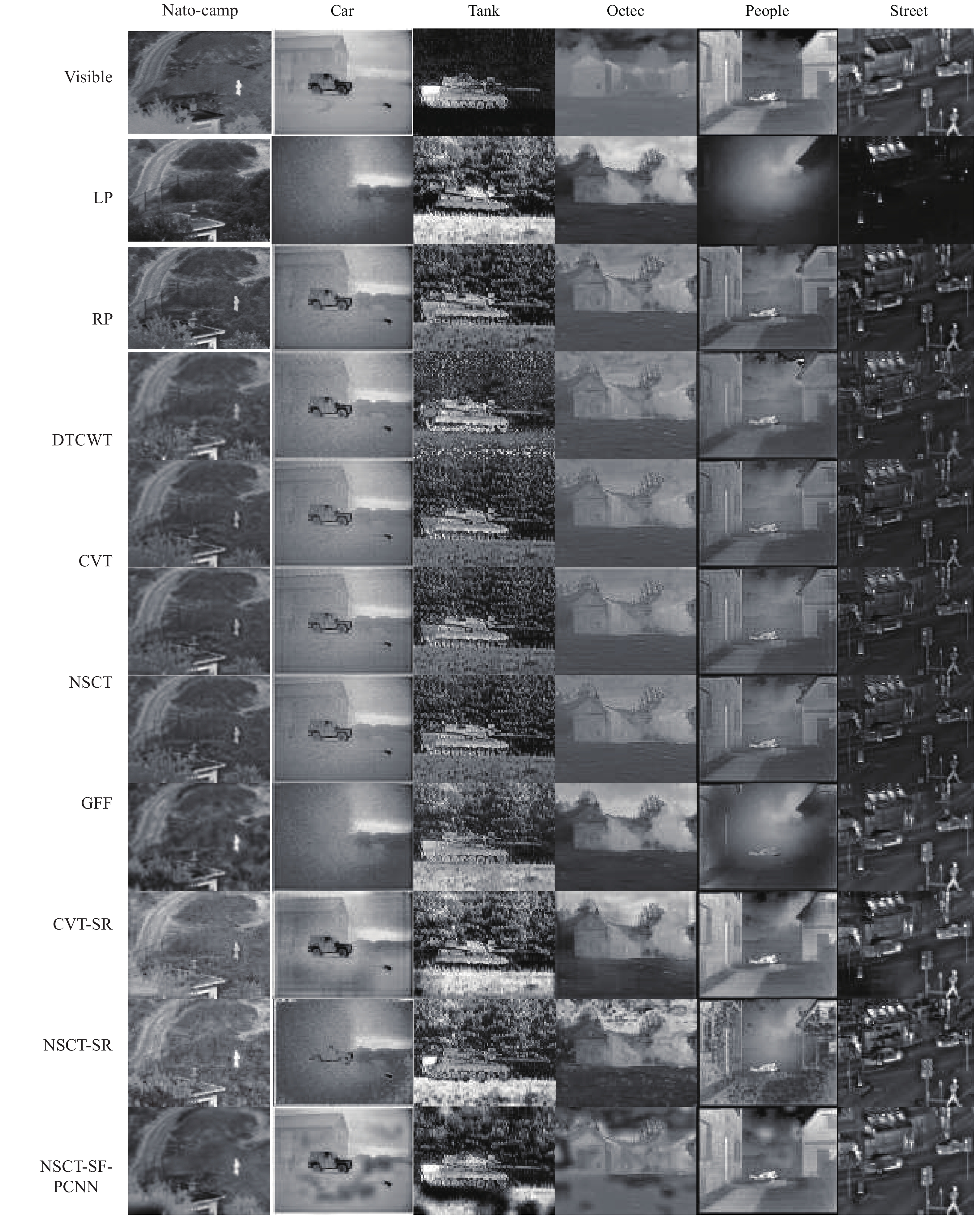

文中选取六个不同应用场景的红外与可见光图像,分别为野外战营(Nato-camp)、车辆(Car)、坦克(Tank)、烟雾场景(Octec)、士兵行人(People)和街道(Street),九种较有代表性的融合方法以及六种评价指标进行实验对比分析。图6所选取的融合方法从上至下分别为:LP[11]、RP[38]、DTCWT[130]、曲波变换(Curvelet Transform, CVT)[130]、NSCT[130]、引导滤波器(Guided Filtering-based Fusion, GFF)[38]、CVT-SR[38]、NSCT-SR[38]、NSCT-SF-PCNN[131]。评价指标分别是IE、SD、MI、AG、边缘相似度(Edge-association)和QAB/F六种,如图7所示。其中,融合方法均采用公开代码,所设参数与原文献一致。具体表现为:所有多尺度变换方法的分解层数均为4层;稀疏表示方法中滑动窗口的步长设置为1,字典大小为256,稀疏系数分解算法K-SVD的迭代次数固定为180次。除混合方法外,所有算法都采用“高频绝对值取大,低频加权平均”的融合规则。其余参数详见对应参考文献。

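The visual results in Fig. 6 differ by method:
- LP loses the details of the house in Car and gives low contrast and brightness in Octec. Its overall result on Street is good, but some details show blocking.
- RP is visually inferior to LP in all six scenes.
- DTCWT, CVT, NSCT, and CVT-SR produce good overall results.
- GFF performs poorly on Car and People, where smoke obscures most of the contours of the vehicles and people.
- NSCT-SR produces blocking artifacts in two of the image pairs.
- NSCT-SF-PCNN performs well on Nato-camp but shows no advantage on the other image pairs.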

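The objective metrics in Fig. 7 show the following:
- RP achieves the highest AG and could be considered for applications that demand high image sharpness.
- DTCWT has the lowest overall SD but relatively good $Q^{AB/F}$ and edge association. DTCWT-based fusion therefore yields low contrast but preserves edge information well.
- NSCT and its hybrid NSCT-SR achieve relatively high IE and MI. NSCT-based methods and their improvements therefore help preserve image information and suit complex scenes.
- GFF reaches best-metric values similar to those of NSCT-SR, but it is not suitable for smoke scenes.
- CVT-SR obtains good IE and AG, which suggests that SR-based fusion can improve IE and AG.
- NSCT-SF-PCNN does not stand out on any of the six metrics in the six scenes. Its fusion rule may be too simple, or the selected scenes may not suit the method.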

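The results of the nine methods in six typical scenes show that the scene strongly affects fusion performance. Choosing a method according to the actual scene improves practicality (Table 1):
- Pyramid and wavelet algorithms are simple and suit well-lit, close-range scenes such as non-destructive equipment inspection.
- NSCT preserves information well and suits scenes with complex backgrounds, such as search and rescue.
- NSST offers high real-time performance and suits video fusion in airborne night-vision surveillance, agricultural automation, and intelligent transportation.
- GFF gives high contrast and suits target reconnaissance and recognition in night-vision environments, but not smoke scenes.
- CVT-SR sees through smoke well and shows promise for forest fire prevention and fire alarms.
- Neural network-based methods are highly adaptive and suit target detection in complex environments.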

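This paper has reviewed the development and applications of commonly used infrared and visible image fusion methods. It focused on the core ideas, development, strengths, and weaknesses of methods based on multi-scale transforms, sparse representation, and neural networks, and it summarized and compared common fusion methods. It introduced the application areas of infrared and visible image fusion, including target recognition, target detection and tracking, and night-vision surveillance, and it summarized nine commonly used evaluation metrics. Finally, representative fusion methods and six evaluation metrics were compared experimentally on six typical scenes.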

Three improvements are suggested to address the problems of current infrared and visible image fusion algorithms:

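(1) Multi-scale transform-based fusion has become a popular research direction, but its algorithms can be improved further. In particular, the currently fixed basis functions and the number of decomposition levels could be designed adaptively.
(2) Different fusion methods can be combined in new ways according to the characteristics of each scene. When building hybrid models, however, the overall performance of the algorithm must be considered.
(3) Deep learning-based fusion is a key future research direction. End-to-end network models proposed in recent years have solved most of the problems of CNN models. Still needed are loss functions tailored to the imaging principles of infrared and visible images, and large datasets collected in different scenes, to further improve the generalization ability of these models.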

To address the challenges in applying infrared and visible image fusion, the following directions can be explored:

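(1) Improve the registration accuracy of infrared and visible images in real scenes. Treating the spatial transformation as a variable allows registration and fusion to be performed simultaneously, which reduces artifacts.
(2) Reduce noise in infrared images. One option is to introduce saliency detection to extract the main infrared targets and suppress noise. Another is to design multi-aperture imaging systems and apply super-resolution to the resulting infrared images, which increases resolution while enlarging the field of view.
(3) Improve the real-time performance of fusion algorithms by applying parallel computing. Parallelizing algorithms in both time and space increases efficiency.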

Research progress of infrared and visible image fusion technology

doi: 10.3788/IRLA20200467

- Received Date: 2021-04-10

- Rev Recd Date: 2021-05-15

- Available Online: 2021-09-30

- Publish Date: 2021-09-23


Key words:

- infrared image /

- visible image /

- image fusion /

- multi-scale transform /

- sparse representation /

- neural network

Abstract: Infrared and visible image fusion combines infrared thermal radiation information with visible detail information. The technique has facilitated development in numerous fields, including production, life sciences, and military surveillance, and has become a key research direction in image technology. Fusion methods based on multi-scale transformation, sparse representation, neural networks, and other approaches are described and compared in terms of their core ideas, fusion frameworks, and research progress. The application status of infrared and visible image fusion in various fields and the commonly used evaluation indices are also introduced. The most representative methods and evaluation indicators are applied to six different scenes to verify the advantages and disadvantages of each method. Finally, the existing problems of infrared and visible image fusion methods are analyzed and summarized, and the development prospects of the technology are presented.
