
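Interpretation of synthetic aperture radar (SAR) images plays an important role in both military and civilian applications. Among its tasks, SAR automatic target recognition (ATR) has been widely studied because of its great potential for battlefield reconnaissance [1]. SAR target azimuth estimation is an important step in SAR ATR. It aims to obtain the azimuth of the target in the SAR image chip to be recognized, which provides prior knowledge for target recognition [2-3]. Improving the efficiency and accuracy of azimuth estimation therefore benefits the performance of subsequent target recognition algorithms.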

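Traditional SAR target azimuth estimation methods mainly operate on the binary target region obtained by segmentation. They estimate the azimuth from the shape of the target region; typical examples include the moment of inertia method [4], the dominant boundary method [4-7] and the principal axis method [8-10]. However, current SAR target segmentation algorithms are still immature, so the accuracy of the segmented target region is limited. Moreover, methods based on the binary target region often suffer from a 180° ambiguity [4], which leads to even larger estimation errors. References [11-12] introduced sparse representation into SAR image azimuth estimation. The basic idea is that the training samples whose azimuths are close to that of the test sample reconstruct it best, so the estimated azimuth is taken as the azimuth of the training sample with the minimum reconstruction error. Compared with methods based on the binary target region, the sparse-representation-based method is more efficient and avoids the cumbersome target segmentation step. However, because it reconstructs the test sample with a single training sample and reads the azimuth from that sample alone, it is unstable and has difficulty producing reliable estimates. Reference [13] exploited the azimuth sensitivity of SAR images: it computes the image correlation coefficients between the test sample and all training samples and derives the estimated azimuth by analyzing the resulting correlation curve.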
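To make the 180° ambiguity concrete, the following minimal sketch estimates an orientation from the principal axis (largest second-moment direction) of a binary target region. It is a generic computation, not the specific algorithms of references [4-10]; the function name `principal_axis_azimuth` and the angle convention (x = column index, y = row index, angle measured from the x axis) are illustrative assumptions.

```python
import numpy as np

def principal_axis_azimuth(mask):
    """Orientation in [0, 180) degrees of the major axis of a binary target region.

    Hypothetical helper: x is the column index, y the row index, and the
    angle is measured from the x axis.
    """
    rows, cols = np.nonzero(mask)
    coords = np.stack([cols, rows]).astype(float)  # 2 x n: one row per coordinate
    eigvals, eigvecs = np.linalg.eigh(np.cov(coords))
    vx, vy = eigvecs[:, np.argmax(eigvals)]        # direction of largest spread
    # An eigenvector is only defined up to sign, so the angle is only known modulo 180 deg
    return float(np.degrees(np.arctan2(vy, vx)) % 180.0)
```

A region and its 180° rotation have identical second moments, so both produce the same angle; this is why shape-based estimates of this kind cannot tell the front of the target from its rear.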

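This paper proposes a SAR target azimuth estimation method based on block sparse representation. SAR images are generally sensitive to azimuth [11-13]: SAR images of the same target acquired at two widely separated azimuths may differ markedly. Therefore, when the test sample is compared with the training samples, only the training samples with azimuths close to its own are meaningful references. Under sparse representation, the non-zero coefficients of the solution generally correspond to these training samples. When the training samples in the dictionary are arranged by azimuth (in ascending or descending order), the non-zero elements of the sparse coefficient vector exhibit a block structure, i.e., they cluster within a certain azimuth interval. Accordingly, block sparse Bayesian learning (BSBL) [14-15] is adopted to solve for representation coefficients with this block-sparse property. On this basis, the test sample is reconstructed with the coefficients of each block, and the block with the minimum reconstruction error is selected for azimuth estimation. The final azimuth estimate is obtained by linearly weighting the azimuths within that block according to the magnitudes of their coefficients. Because the proposed method follows the basic idea of sparse representation, it is computationally more efficient than traditional methods based on region features. Compared with the conventional sparse representation method of references [11-12], it has the following advantages. First, it fully accounts for the azimuth sensitivity of the target in SAR images and encodes this property by ordering the dictionary by azimuth; the subsequent BSBL exploits the resulting block structure of the coefficients, which improves the solution accuracy. Second, the azimuth estimate combines the coefficients over an entire azimuth interval, which gives higher accuracy and robustness than methods relying on a single sample. Azimuth estimation experiments on SAR images of three targets from the MSTAR dataset, together with comparisons against several other azimuth estimation methods, verify the effectiveness of the proposed method.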


稀疏表示[11-15]是基于压缩感知理论的一种新的信号处理方法,在图像处理领域得到了广泛运用。其基本思想是用各类训练样本构成的全局字典对测试样本

${\boldsymbol{y}}$ 进行线性表示,如公式(1)所示:式中:

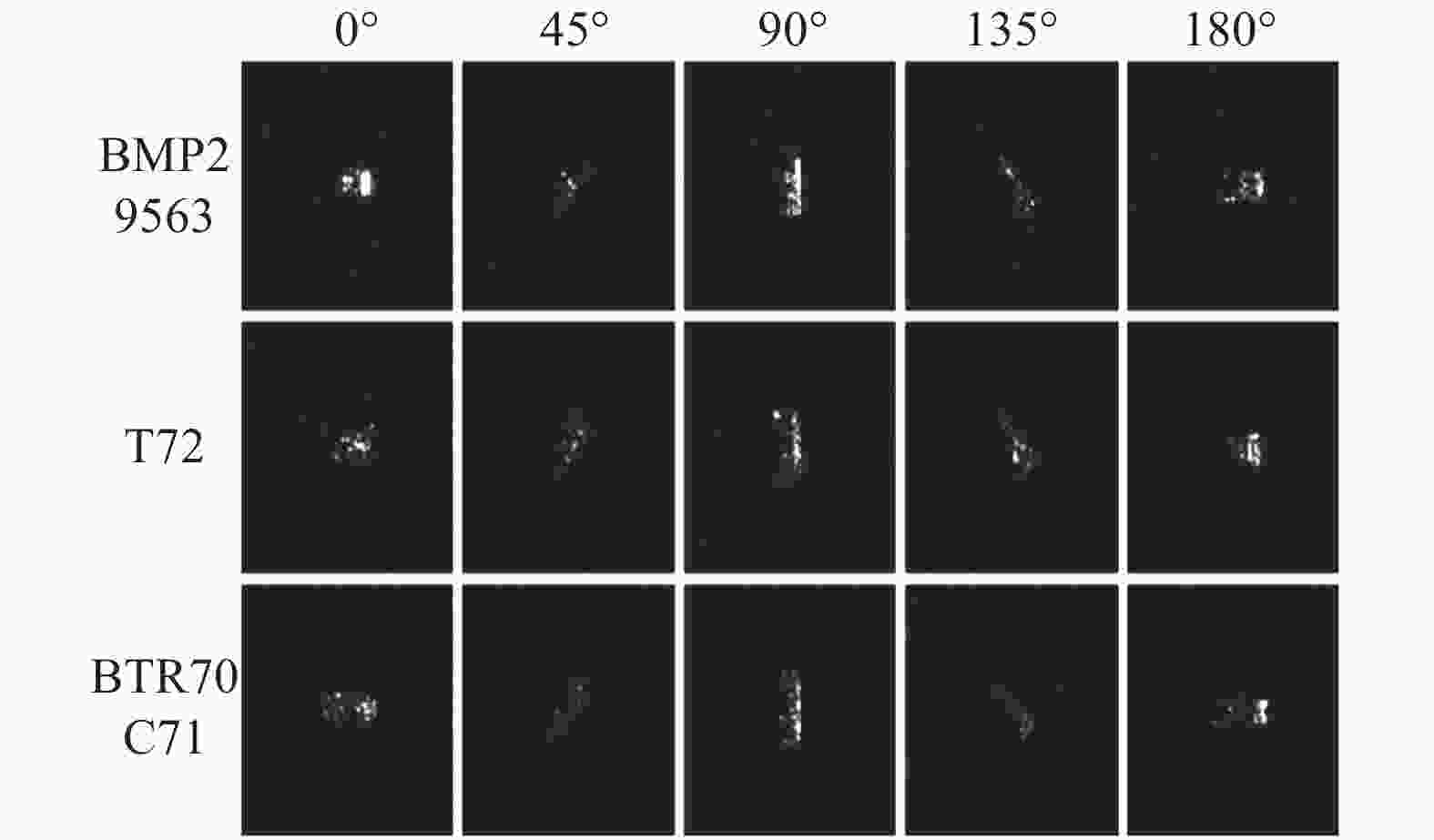

${\boldsymbol{A}} = [{{\boldsymbol{A}}_1},{{\boldsymbol{A}}_2}, \cdots ,{{\boldsymbol{A}}_C}] \in {{{R}}^{d \times N}}$ 代表各个类别组成的全局字典,${{\boldsymbol{A}}_i} \in {{{R}}^{d \times {N_i}}}$ 包含了第$i$ 个训练类的${N_i}$ 个样本;${\boldsymbol{\hat x}}$ 表示线性系数矢量;$\varepsilon $ 为重构误差大小。公式(1)中涉及${l_0}$ 范数,该优化为非凸优化问题,难以直接求解。因此,通常将其近似为${l_1}$ 范数优化进而转变为凸优化问题;也可以采用贪婪算法求解,如正交匹配追踪算法(Orthogonal Matching Pursuit,OMP)获得近似解。上述表示系数的大小体现了测试样本与不同类别中训练样本的相似性。具体应用到方位角估计中,根据SAR图像的方位角敏感性,那些与测试样本高度相关的样本实际上与测试样本保持接近的方位角。如图1所示,不同方位角下的三类MSTAR目标的SAR图像均具有较为明显的特性差异。参考文献[11-12]中提出的基于稀疏表示的方位角估计方法就是选用具有最小重构误差的训练样本得到估计方位角。实际上,SAR图像的相关性可以在一定的方位角区间内保持[13],因此综合一个区间内结果有利于得到更稳健的估计结果。
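Eq. (1) can be approximated greedily with OMP, as noted above. The following is a minimal, generic NumPy sketch of OMP, assuming a dictionary with L2-normalised columns; the function name `omp` and its parameters are illustrative and are not taken from references [11-15].

```python
import numpy as np

def omp(A, y, n_nonzero, tol=1e-10):
    """Greedy approximation of Eq. (1) by orthogonal matching pursuit.

    Hypothetical helper; assumes the columns of A have unit L2 norm.
    """
    x = np.zeros(A.shape[1])
    support = []
    residual = np.asarray(y, dtype=float).copy()
    coef = np.zeros(0)
    for _ in range(n_nonzero):
        # Atom most correlated with the current residual
        j = int(np.argmax(np.abs(A.T @ residual)))
        if j in support:          # no new atom can improve the fit
            break
        support.append(j)
        # Least-squares refit on the enlarged support (the "orthogonal" step)
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
        if np.linalg.norm(residual) <= tol:
            break
    x[support] = coef
    return x
```

The method of references [11-12] then compares training samples by their reconstruction errors; OMP is only one of the solvers mentioned for Eq. (1), and an $l_1$ solver could be used instead.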

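Suppose that, within the global dictionary, the sub-dictionary of each class is arranged in ascending order of azimuth. The non-zero coefficients then concentrate within certain azimuth intervals, and the linear coefficient vector is block sparse. To obtain a more accurate $\hat{\boldsymbol{x}}$, BSBL [14-15] is used to solve Eq. (1). A coefficient vector with block structure can be written as

$$\boldsymbol{x} = [\underbrace{x_1, \cdots, x_{d_1}}_{\boldsymbol{x}_1^{T}}, \cdots, \underbrace{x_{d_{g-1}+1}, \cdots, x_{d_g}}_{\boldsymbol{x}_g^{T}}]^{T} \tag{2}$$

where $\boldsymbol{x}$ consists of $g$ blocks, only a few of which contain non-zero elements. To exploit this block sparsity, BSBL [14-15] models each block of the coefficient vector with a parameterized Gaussian distribution:

$$p(\boldsymbol{x}_i; \gamma_i, \boldsymbol{B}_i) \sim \mathcal{N}(\boldsymbol{0}, \gamma_i \boldsymbol{B}_i), \quad i = 1, \cdots, g \tag{3}$$

where the parameters $\gamma_i$ and $\boldsymbol{B}_i$ describe the confidence level and the intra-block correlation, respectively. Assuming that the blocks are mutually independent, Eq. (3) can be rewritten as

$$p(\boldsymbol{x}; \{\gamma_i, \boldsymbol{B}_i\}_{i=1}^{g}) \sim \mathcal{N}(\boldsymbol{0}, \boldsymbol{\Gamma}) \tag{4}$$

where $\boldsymbol{\Gamma}$ is a block diagonal matrix whose $i$-th diagonal block is $\gamma_i \boldsymbol{B}_i$. The observed sample $\boldsymbol{y}$ is modeled as

$$\boldsymbol{y} = \boldsymbol{A}\boldsymbol{x} + \boldsymbol{n} \tag{5}$$

where $\boldsymbol{n}$ is the noise term, modeled as zero-mean Gaussian with variance $\beta^{-1}$. Hence the posterior probability density of $\boldsymbol{x}$ is

$$p(\boldsymbol{x} \mid \boldsymbol{y}; \beta, \{\gamma_i, \boldsymbol{B}_i\}_{i=1}^{g}) = \mathcal{N}(\boldsymbol{\mu}_x, \boldsymbol{\Sigma}_x) \tag{6}$$

with $\boldsymbol{\mu}_x = \beta \boldsymbol{\Sigma}_x \boldsymbol{A}^{T} \boldsymbol{y}$ and $\boldsymbol{\Sigma}_x = (\boldsymbol{\Gamma}^{-1} + \beta \boldsymbol{A}^{T} \boldsymbol{A})^{-1}$. BSBL solves the problem iteratively. With the estimated hyperparameters $\{\gamma_i, \boldsymbol{B}_i\}$ and $\beta$, the maximum a posteriori (MAP) estimate of the block sparse coefficients is given by Eq. (7):

$$\hat{\boldsymbol{x}} = \boldsymbol{\mu}_x = \boldsymbol{\Gamma}\boldsymbol{A}^{T}(\beta^{-1}\boldsymbol{I} + \boldsymbol{A}\boldsymbol{\Gamma}\boldsymbol{A}^{T})^{-1}\boldsymbol{y} \tag{7}$$

The detailed solution procedure is given in references [14-15].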
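The iteration can be illustrated with a minimal sketch of a simplified block SBL solved by expectation maximization. It assumes $\boldsymbol{B}_i = \boldsymbol{I}$ for every block and a fixed noise precision $\beta$, so only the $\gamma_i$ are learned; the full BSBL of references [14-15] also learns $\boldsymbol{B}_i$ and $\beta$. The function name `block_sbl_em` and its parameters are hypothetical, and this is not the authors' implementation.

```python
import numpy as np

def block_sbl_em(A, y, block_sizes, beta, n_iter=200, gamma_floor=1e-12):
    """Simplified block-SBL sketch (hypothetical helper, not the full BSBL of [14-15]).

    Assumes B_i = I for every block and a fixed noise precision beta; only the
    block variances gamma_i are learned, with EM updates.
    Returns (mu_x, gammas), where mu_x is the posterior mean used as x_hat.
    """
    d = A.shape[0]
    block_sizes = np.asarray(block_sizes)
    edges = np.concatenate(([0], np.cumsum(block_sizes)))
    if edges[-1] != A.shape[1]:
        raise ValueError("block sizes must sum to the number of dictionary atoms")
    gammas = np.ones(len(block_sizes))
    for _ in range(n_iter):
        # With B_i = I, Gamma = blockdiag(gamma_i * B_i) in Eq. (4) is diagonal
        Gamma = np.diag(np.repeat(gammas, block_sizes))
        # Posterior moments via C = beta^{-1} I + A Gamma A^T (the Eq. (7) form),
        # which stays well conditioned when some gamma_i shrink towards zero
        C = np.eye(d) / beta + A @ Gamma @ A.T
        K = np.linalg.solve(C, A @ Gamma).T    # equals Gamma A^T C^{-1}
        mu = K @ y
        Sigma = Gamma - K @ A @ Gamma
        # EM update for each block: gamma_i = tr(Sigma_i + mu_i mu_i^T) / d_i
        for i, (a, b) in enumerate(zip(edges[:-1], edges[1:])):
            m = mu[a:b]
            gammas[i] = max((m @ m + np.trace(Sigma[a:b, a:b])) / (b - a), gamma_floor)
    return mu, gammas
```

On a dictionary whose true coefficient vector is non-zero in a single block, most of the energy of the returned $\boldsymbol{\mu}_x$ should concentrate in that block, because the $\gamma_i$ of the inactive blocks shrink towards zero over the iterations.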

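Based on the solution of the sparse representation problem, the test sample is reconstructed separately with the coefficients of each block that contains non-zero coefficients, as follows:

$$e_i = \|\boldsymbol{y} - \boldsymbol{A}\delta_i(\hat{\boldsymbol{x}})\|_2, \quad i = 1, \cdots, g \tag{8}$$

where $\delta_i(\hat{\boldsymbol{x}})$ sets all coefficients of $\hat{\boldsymbol{x}}$ outside the $i$-th block to zero. The candidate interval for azimuth estimation is selected by the minimum-error criterion:

$$K = \arg\min_{i} e_i \tag{9}$$

Denote the azimuth sequence in the $K$-th block by $[\theta_1, \theta_2, \cdots, \theta_M]$ and the corresponding coefficient magnitudes by $[r_1, r_2, \cdots, r_M]$. The azimuth estimate is obtained by linear weighting. The weight of each azimuth is computed from its coefficient magnitude:

$$w_j = \frac{r_j}{\sum_{m=1}^{M} r_m}, \quad j = 1, \cdots, M \tag{10}$$

The estimated azimuth is then the linearly weighted sum in Eq. (11):

$$\hat{\theta} = \sum_{j=1}^{M} w_j \theta_j \tag{11}$$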
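Eqs. (8)-(11) can be sketched in NumPy as follows. This is an illustrative reading of the equations, not the authors' code: the function names are hypothetical, the block boundaries `edges` are an assumed input, and the coefficient magnitudes $r_j$ are taken as $|\hat{x}_j|$.

```python
import numpy as np

def block_residuals(A, y, x_hat, edges):
    """Eq. (8): ||y - A delta_i(x_hat)||_2 for each block; inf for all-zero blocks."""
    errs = np.full(len(edges) - 1, np.inf)
    for i, (a, b) in enumerate(zip(edges[:-1], edges[1:])):
        if not np.any(x_hat[a:b]):
            continue                       # only blocks with non-zero coefficients
        delta = np.zeros_like(x_hat)
        delta[a:b] = x_hat[a:b]            # delta_i(x_hat): keep block i, zero the rest
        errs[i] = np.linalg.norm(y - A @ delta)
    return errs

def weighted_block_azimuth(A, y, x_hat, azimuths, edges):
    """Eqs. (9)-(11): pick the minimum-residual block, then weight its azimuths."""
    errs = block_residuals(A, y, x_hat, edges)
    k = int(np.argmin(errs))               # Eq. (9): candidate block K
    a, b = edges[k], edges[k + 1]
    r = np.abs(x_hat[a:b])                 # coefficient magnitudes r_1..r_M
    w = r / r.sum()                        # Eq. (10)
    # Eq. (11); plain linear weighting assumes the block does not straddle 0/360 deg
    return float(w @ azimuths[a:b]), k
```

For example, with an identity dictionary, azimuths `[10, 20, 30, 40, 50, 60]`, two blocks of three atoms and `x_hat = [0, 1, 1, 0, 0, 0.1]`, the first block reconstructs `y = [0, 1, 1, 0, 0, 0]` exactly and the weighted estimate is 25°.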

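Compared with estimating the azimuth from a single sample, combining the whole azimuth interval both avoids the perturbation error that may arise when a single sample is selected and yields a more robust estimate through weighted combination.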

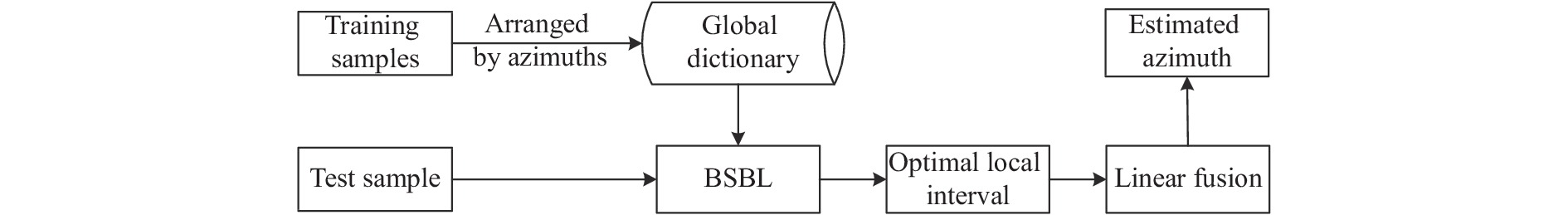

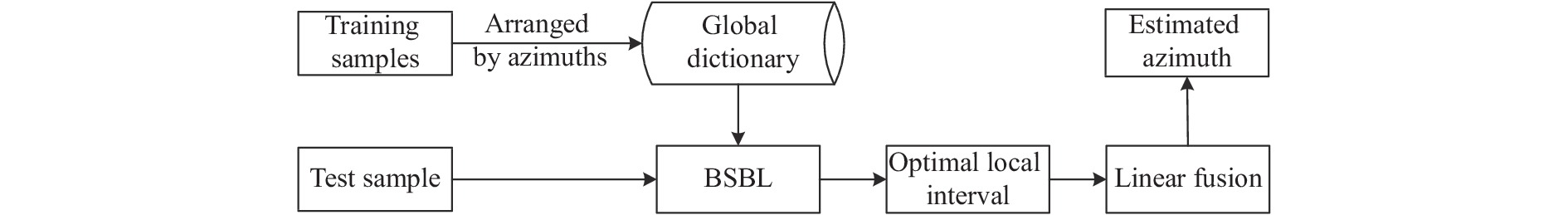

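Based on the above analysis, the basic workflow of the proposed method is shown in Figure 2. It consists of the following steps:

Step 1: Sort the training samples in ascending order of azimuth to construct the global dictionary;

Step 2: Perform block sparse reconstruction of the test sample over the dictionary, solving for the sparse representation coefficients with BSBL;

Step 3: Reconstruct the test sample within each block and select the candidate interval by the minimum reconstruction error;

Step 4: Within the candidate interval, obtain the target azimuth estimate by the linear weighting of Eqs. (10) and (11).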
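Step 1 can be sketched as follows. The paper does not state how the blocks are delimited, so this sketch assumes fixed-size blocks that never straddle two classes, with each class sorted by azimuth as described for the global dictionary. The function name `build_sorted_dictionary` and its `block_size` parameter are hypothetical.

```python
import numpy as np

def build_sorted_dictionary(classes, block_size):
    """Step 1 sketch (hypothetical helper).

    classes: list of (images, azimuths) pairs, one pair per target class.
    Returns the global dictionary A (one unit-norm column per training image),
    the azimuth of every column, and block boundaries `edges`.
    """
    columns, azimuths, edges = [], [], [0]
    for images, az in classes:
        order = np.argsort(az)                     # ascending azimuth within the class
        for i in order:
            v = np.asarray(images[i], dtype=float).ravel()
            columns.append(v / np.linalg.norm(v))  # L2-normalised atom
            azimuths.append(float(az[i]))
        start, n = edges[-1], len(order)
        # Assumed partition: fixed-size blocks that never straddle two classes
        edges.extend(start + k for k in range(block_size, n, block_size))
        edges.append(start + n)
    return np.stack(columns, axis=1), np.array(azimuths), np.array(edges)
```

The returned `edges` array marks where each block starts and ends, which is the information Steps 3 and 4 need to evaluate Eqs. (8)-(11) block by block.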


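The experiments use SAR images of three targets from the MSTAR dataset; the samples are listed in Table 1. BMP2 and T72 each include three variants (distinguished by serial number, SN), while BTR70 has only one. The test samples were collected at a 15° depression angle and the training samples at 17°. The true azimuth of each sample can be read from the original record data of the MSTAR images and serves as the reference value for computing estimation accuracy. To demonstrate the effectiveness of the proposed method, the following experiments compare it with the minimum enclosing rectangle (MER) method of reference [4], the dominant boundary method of reference [5] and the sparse-representation-based method of reference [11].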

| Type | Depression angle/(°) | BMP2 | BTR70 | T72 |
|---|---|---|---|---|
| Training | 17 | 233 (SN_1), 232 (SN_2), 233 (SN_3) | 233 (SN_1) | 232 (SN_1), 231 (SN_2), 233 (SN_3) |
| Test | 15 | 195 (SN_1), 196 (SN_2), 196 (SN_3) | 196 (SN_1) | 196 (SN_1), 195 (SN_2), 191 (SN_3) |

Table 1. Training and test samples of the three MSTAR targets


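The proposed algorithm was applied to the test samples of the three targets, and the estimation error of each test sample was obtained by comparing its estimated azimuth with the true value. Table 2 summarizes the results of the proposed method; an estimate within ±10° of the true value is counted as correct, and otherwise as incorrect. Under this criterion, the proposed method correctly estimates the target azimuth for more than 99% of the test samples (1357 of 1365). Table 3 further breaks down the estimation accuracy by error interval: the errors of most test samples are within 5°, indicating both a high correct rate and high precision. Figure 3 shows the error distribution in more detail: for most test samples the azimuth error is within 2°, which further shows that the proposed method achieves both high precision and strong robustness.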

| Target class | Number of samples | Number of errors | Percentage of correct samples |
|---|---|---|---|
| BMP2 (SN_1) | 195 | 1 | 99.49% |
| BMP2 (SN_2) | 196 | 0 | 100% |
| BMP2 (SN_3) | 196 | 1 | 99.49% |
| BTR70 (SN_1) | 196 | 2 | 98.98% |
| T72 (SN_1) | 196 | 3 | 98.47% |
| T72 (SN_2) | 195 | 1 | 99.49% |
| T72 (SN_3) | 191 | 0 | 100% |

Table 2. Azimuth estimation results of the test samples of the three MSTAR targets by the proposed method

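| Target | Number of samples | Error <5° | Error <10° | Mean | Variance |
|---|---|---|---|---|---|
| BMP2 | 587 | 573 | 585 | 2.01 | 1.85 |
| BTR70 | 196 | 192 | 194 | 2.05 | 1.84 |
| T72 | 582 | 569 | 578 | 2.16 | 1.77 |
| Total | 1365 | 1334 | 1357 | 2.07 | 1.81 |

Table 3. Results of the proposed method at different estimation precisions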
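The statistics in Tables 2 and 3 can be reproduced from per-sample errors with a few lines of NumPy. This is a sketch under stated assumptions: the paper does not say whether errors wrap around 0°/360°, whether thresholds are strict, or whether the population or sample variance is reported, so the sketch assumes circular errors, strict `<` thresholds (as in the Table 3 headers) and population variance. All function names are hypothetical.

```python
import numpy as np

def azimuth_error(est, true):
    """Absolute azimuth error in degrees, measured on the circle (0 and 360 coincide)."""
    d = np.abs(np.asarray(est, float) - np.asarray(true, float)) % 360.0
    return np.minimum(d, 360.0 - d)

def summarize(errors, thresholds=(5.0, 10.0)):
    """Table 3 style summary: counts below each threshold, mean and population variance."""
    errors = np.asarray(errors, float)
    counts = {t: int(np.sum(errors < t)) for t in thresholds}
    return counts, float(errors.mean()), float(errors.var())

def correct_percentage(n_samples, n_errors):
    """Table 2 style percentage of correctly estimated samples, rounded to 0.01%."""
    return round(100.0 * (n_samples - n_errors) / n_samples, 2)
```

For instance, `correct_percentage(196, 1)` gives 99.49, the value for a class of 196 test samples with one error.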

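Table 4 compares the overall performance of the proposed method with that of the other azimuth estimation methods. The proposed method clearly outperforms the MER method at every error threshold, and it outperforms the dominant boundary method at tight thresholds (76% versus 55% at 2°), the two converging at 10° (99% each); both of these methods rely on geometric shape features. The proposed method also avoids the 180° ambiguity from which these two methods suffer. It outperforms the sparse representation method as well, and the advantage is most pronounced under tight error thresholds (76% versus 64% at 2°). Because BSBL takes into account the inherent azimuth sensitivity of SAR images, it locates the azimuth interval of the test sample more accurately, and the interval-based azimuth weighting then yields a more accurate estimate. Table 5 compares the efficiency of the methods, i.e., the time required to estimate a single test sample. The proposed method has the lowest time consumption (10.5 ms), confirming its efficiency. The traditional methods based on the binary target region must first perform relatively cumbersome image preprocessing and target segmentation and therefore take more time. Compared with the sparse representation method, the BSBL solver adopted here is more efficient, which makes the overall estimation algorithm faster. These results demonstrate the effectiveness and robustness of solving for the sparse representation coefficients with BSBL and of estimating the azimuth jointly over an azimuth interval.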

Figure 3. Numbers of correctly estimated samples by the proposed algorithm at different estimation precisions

| Method (threshold of error/(°)) | 2 | 4 | 6 | 8 | 10 |
|---|---|---|---|---|---|
| Proposed | 76% | 88% | 97% | 99% | 99% |
| MER | 13% | 24% | 39% | 57% | 68% |
| Dominant boundary | 55% | 82% | 93% | 97% | 99% |
| Sparse representation | 64% | 80% | 93% | 98% | 99% |

Table 4. Correct estimation percentages of different methods at different estimation precisions

| Method | Average time consumption/ms |
|---|---|
| Proposed | 10.5 |
| MER | 45.2 |
| Dominant boundary | 40.2 |
| Sparse representation | 12.1 |

Table 5. Time consumption of different methods


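This paper proposed a SAR target azimuth estimation method based on block sparse Bayesian learning. The method examines the block distribution of the sparse representation coefficients over the global dictionary, solves for the coefficients with BSBL, and then obtains a robust azimuth estimate by combining the azimuths within the interval that has the minimum reconstruction error. Compared with traditional methods based on the target region, the proposed method achieves higher estimation accuracy and higher overall efficiency. Compared with the sparse-representation-based azimuth estimation method, it fully exploits the azimuth sensitivity of SAR targets and combines the information within an azimuth interval, further improving estimation accuracy and robustness. Experiments and evaluations on samples of three targets from the MSTAR dataset show that the proposed method achieves high-precision target azimuth estimation with higher efficiency.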

Target azimuth estimation of synthetic aperture radar image based on block sparse Bayesian learning

doi: 10.3788/IRLA20210282

- Received Date: 2021-05-06

- Rev Recd Date: 2021-06-06

- Publish Date: 2022-05-06


Key words:

- synthetic aperture radar

- azimuth estimation

- block sparse Bayesian learning

- linear weighting

Abstract: A target azimuth estimation algorithm for synthetic aperture radar (SAR) images based on block sparse Bayesian learning was proposed. SAR images are highly sensitive to target azimuth: a SAR image at a given azimuth is highly correlated only with samples at nearby azimuths. The proposed method was developed from the idea of sparse representation. First, all training samples were sorted by azimuth to construct the global dictionary. The sparse coefficients of the test sample over this global dictionary should then be block sparse, i.e., the non-zero coefficients mainly accumulate in a local part of the global dictionary, and the positions of the solved blocks effectively reflect the azimuth information of the test sample. The block sparse Bayesian learning (BSBL) algorithm was employed to solve for the block sparse coefficients, and the candidate block was then chosen by the minimum reconstruction error. Within the optimal block, the estimated azimuth was calculated by linearly fusing the azimuths of all training samples in the block, so that a robust estimate could be achieved. The proposed method considers the azimuth sensitivity of SAR images and comprehensively uses the valid information in a local dictionary, so the instability of relying on a single reference training sample is avoided. Experiments were conducted on the moving and stationary target acquisition and recognition (MSTAR) dataset to validate the effectiveness of the proposed method in comparison with several classical algorithms. The experimental results validate the superior performance of the proposed method.
